[cs231n] 2. Image Classification - NN, KNN, Linear Classification

Image Classification

A computer does not perceive an image the way a person does; it sees only numbers, namely pixel values!

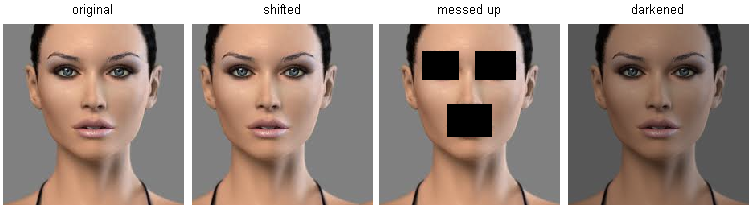
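To make "an image is just numbers" concrete, here is a minimal sketch using NumPy. The 2x2 image and its pixel values are made up for illustration; real images are simply larger arrays of the same kind.

```python
import numpy as np

# A hypothetical 2x2 RGB image: height x width x 3 channels, values in 0..255.
image = np.array(
    [[[255, 0, 0], [0, 255, 0]],
     [[0, 0, 255], [255, 255, 255]]],
    dtype=np.uint8,
)

print(image.shape)  # (height, width, channels)

# Classifiers in these notes treat the image as one long vector of numbers.
flat = image.reshape(-1)
print(flat.shape)
print(flat)
```

Flattening like this is what turns each image into one row of the `N x D` matrix used by the classifiers later in the notes.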

Classifying a wide variety of images requires an algorithm that is robust to many kinds of variation.

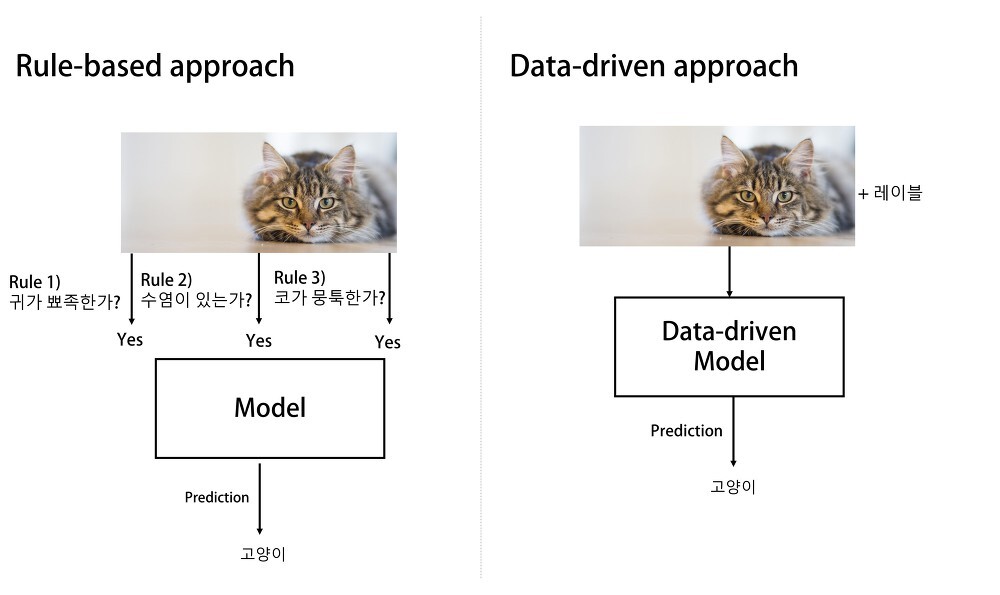

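Data-driven Image Classification

The earlier rule-based approach could not be applied across a wide range of classes.

ex) A cat "has" ears, eyes, and a nose -> use this to decide that the image is a cat

but cats themselves look different from breed to breed.

Instead of writing the rules by hand, let's build a model from data to solve the problem!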

1. Collect a (large) dataset of images and labels

2. Use Machine Learning to train a classifier

-> build a model that can recognize a variety of objects

3. Evaluate the classifier on new images

NN (Nearest Neighbor)

- Store the training data; at prediction time, output the label of the training example closest to the input image

1. memorize all data and labels

2. predict the label of a new image from the most similar training image

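On the right, you can see the training images sorted by how similar they are to the test image.

* So how can we measure how close two images are (their distance)?

(+) The distance is computed from the pixel values (the RGB values) at the same position in both images.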
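As a small worked example of that pixel-by-pixel comparison, the sketch below computes the L1 distance (sum of absolute differences) between two tiny patches. The pixel values are made up for illustration.

```python
import numpy as np

# Two hypothetical 2x2 single-channel patches (illustrative values only).
# Plain integers are used here; uint8 images should be cast to a signed type
# first, otherwise the subtraction wraps around.
test_patch = np.array([[56, 32], [90, 23]])
train_patch = np.array([[10, 20], [8, 10]])

# Compare pixels at the same position, then add up the absolute differences.
abs_diff = np.abs(test_patch - train_patch)
l1_distance = int(abs_diff.sum())

print(abs_diff)     # [[46 12] [82 13]]
print(l1_distance)  # 46 + 12 + 82 + 13 = 153
```

A smaller value means the two patches are more alike under this measure.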

    # L1 example
    import numpy as np


    class NearestNeighbor:
        def __init__(self):
            pass

        # Training just memorizes the training data (simple)
        def train(self, X, y):
            """X is N x D where each row is an example.
            y is 1-dimensional of size N."""
            # the nearest neighbor classifier simply remembers all the training data
            self.Xtr = X
            self.ytr = y

        def predict(self, X):
            """X is N x D where each row is an example we wish to predict a label for."""
            num_test = X.shape[0]
            # let's make sure that the output type matches the input type
            Ypred = np.zeros(num_test, dtype=self.ytr.dtype)

            # loop over all test rows (range replaces Python 2's xrange)
            for i in range(num_test):
                # find the nearest training image to the i'th test image
                # using the L1 distance (sum of absolute value differences);
                # cast uint8 images to a signed type first to avoid wrap-around
                distances = np.sum(np.abs(self.Xtr - X[i, :]), axis=1)
                min_index = np.argmin(distances)  # index with the smallest distance
                Ypred[i] = self.ytr[min_index]  # predict the label of the nearest example

            return Ypred

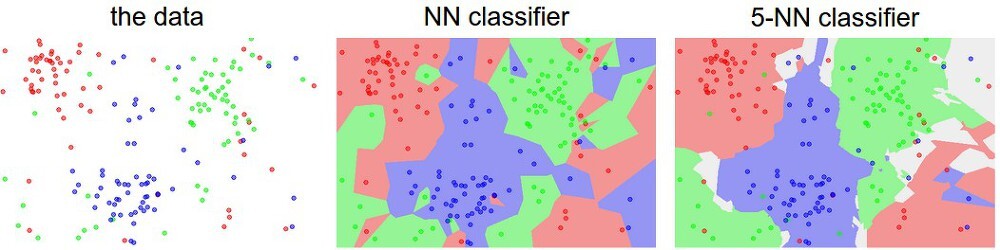

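KNN (K-Nearest Neighbors)

- NN bases its prediction on the label of a single neighbor, so its performance suffers.

At test (prediction) time, find the k nearest neighbors of the input and predict the label that appears most often among them.

(voting: taking the most frequent result among several candidates as the prediction)

KNN is less sensitive to outliers.

It generalizes better to unseen data!

(The goal of machine learning: to predict unseen data correctly)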
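The voting step can be sketched as follows. This is a minimal illustration, not the course's reference code: the helper name `knn_predict`, the L1 distance, and the toy data (including one deliberately mislabeled outlier) are all assumptions made for the example.

```python
from collections import Counter

import numpy as np


def knn_predict(X_train, y_train, x, k=3):
    """Predict the label of one example x by majority vote among
    its k nearest training rows (L1 distance)."""
    distances = np.sum(np.abs(X_train - x), axis=1)
    nearest = np.argsort(distances)[:k]         # indices of the k closest rows
    votes = Counter(y_train[nearest].tolist())  # count labels among neighbors
    return votes.most_common(1)[0][0]           # most frequent label wins


# Toy data: class 0 near the origin, class 1 far away,
# plus one outlier labeled 1 sitting inside the class-0 cluster.
X_train = np.array([[0, 0], [1, 0], [0, 1], [1, 1], [10, 10], [11, 10]])
y_train = np.array([0, 0, 0, 1, 1, 1])

x = np.array([0.9, 0.9])
print(knn_predict(X_train, y_train, x, k=1))  # follows the outlier
print(knn_predict(X_train, y_train, x, k=3))  # the vote overrules the outlier
```

With `k=1` the query copies the outlier's label, while with `k=3` the two correctly labeled neighbors outvote it, which is the sense in which KNN is less sensitive to outliers.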

* How do we find the best model?

Tune the hyperparameters!

In KNN these are k and the distance function

- try many settings and keep the one that works best

but the test set must not be used to choose hyperparameters

- the test set should be used only for the final performance evaluation!

- split off part of the training set as a validation set for hyperparameter tuning.
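The tuning loop described above might look like the sketch below. Everything here is an assumption for illustration: the synthetic two-blob data, the 160/40 split, the candidate values of k, and the helper `knn_predict`. A real test set would be kept aside and touched only once, after `best_k` is chosen.

```python
import numpy as np


def knn_predict(X_train, y_train, X, k):
    """Label each row of X by majority vote among its k nearest
    training rows (L1 distance). Labels must be non-negative ints."""
    preds = np.empty(len(X), dtype=y_train.dtype)
    for i, x in enumerate(X):
        distances = np.sum(np.abs(X_train - x), axis=1)
        nearest = np.argsort(distances)[:k]
        preds[i] = np.bincount(y_train[nearest]).argmax()
    return preds


rng = np.random.default_rng(0)

# Synthetic data standing in for the training set: two Gaussian blobs.
X = np.vstack([rng.normal(0.0, 1.0, (100, 2)), rng.normal(2.0, 1.0, (100, 2))])
y = np.array([0] * 100 + [1] * 100)

# Shuffle, then split the training data into train and validation parts.
perm = rng.permutation(len(X))
X, y = X[perm], y[perm]
X_tr, y_tr = X[:160], y[:160]
X_val, y_val = X[160:], y[160:]

# Try several k values and score each one on the validation set only.
val_acc = {}
for k in [1, 3, 5, 7]:
    val_acc[k] = float(np.mean(knn_predict(X_tr, y_tr, X_val, k) == y_val))

best_k = max(val_acc, key=val_acc.get)
print(val_acc, best_k)
```

The same loop extends to the other hyperparameter, the distance function, by adding it as a second thing to iterate over.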

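- NN tends not to perform well on image classification.

Why?

1. prediction is very slow

2. Pixel-wise differences do not capture the similarity (or difference) between images well

3. Curse of dimensionality

- For the algorithm to work well, the training data must cover the space densely, and the amount needed grows exponentially with the number of dimensions (not merely with its square),

which is impossible to collect in practice, so performance degrades badly on high-dimensional data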
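A quick back-of-the-envelope calculation shows how fast that requirement grows. The density of 4 sample points per axis is an arbitrary assumption chosen for illustration.

```python
# Hypothetical sampling density: 4 training points along each axis.
points_per_axis = 4

# Covering a d-dimensional grid at that density needs points_per_axis ** d points.
needed = {dim: points_per_axis ** dim for dim in (1, 2, 3, 10)}

for dim, count in needed.items():
    print(f"{dim:>2} dimensions -> {count} points")
```

Going from 1 to 3 dimensions takes the count from 4 to 64, and 10 dimensions already needs over a million points; a flattened image has thousands of dimensions.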

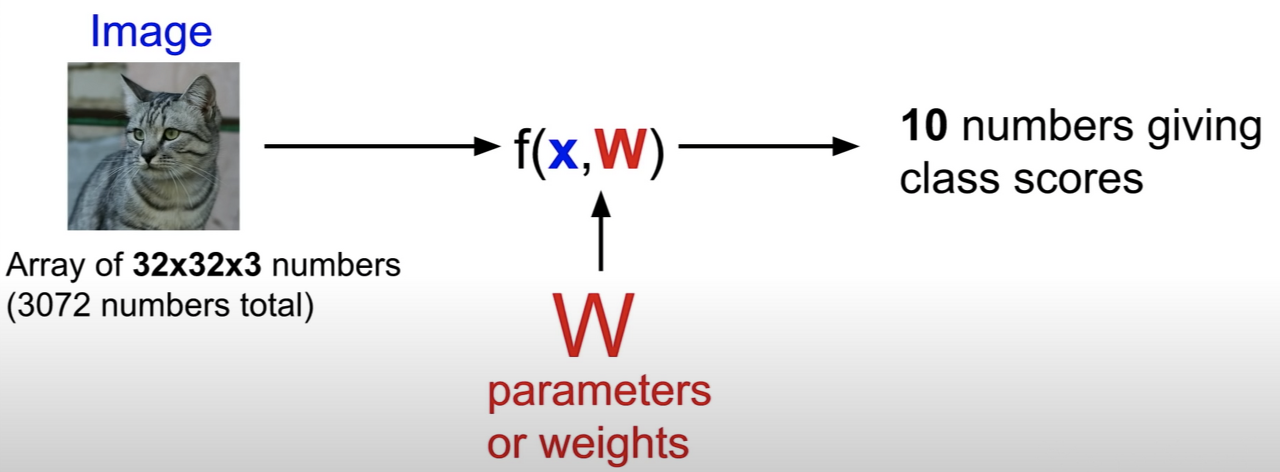

Linear Classifier

A more powerful approach to image classification than KNN

Parametric Approach

f(x, W) = Wx + b

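- inputs of f: x, W

- x: the input data

- W: the parameters (weights)

- b (bias): an offset that helps when classifying unseen data

- it exists independently of the data -> it is added to the scores regardless of the input

The simplest approach is to multiply x by W

- a matrix multiplication (each class score is the inner product of one row of W with x)

From here on, we will keep improving the values of W (the parameters) to build a classifier that performs well.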
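A minimal sketch of f(x, W) = Wx + b in NumPy follows. The sizes (10 classes, a 32x32x3 image flattened to 3072 values) are assumed for illustration, and W is filled with random numbers rather than learned values, so the predicted class is meaningless here.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed sizes: 10 classes, one 32x32 RGB image flattened to 3072 numbers.
num_classes, num_pixels = 10, 32 * 32 * 3

x = rng.random(num_pixels)                          # input image as a vector
W = rng.normal(0.0, 0.01, (num_classes, num_pixels))  # one row of weights per class
b = np.zeros(num_classes)                           # one bias per class, independent of x

# f(x, W) = Wx + b: one score per class.
scores = W @ x + b
predicted_class = int(np.argmax(scores))

print(scores.shape)
print(predicted_class)
```

Unlike KNN, prediction needs only W and b, not the training set; training amounts to finding values of W and b that give the correct class the highest score.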
